Teaching an RNN to write movie scripts

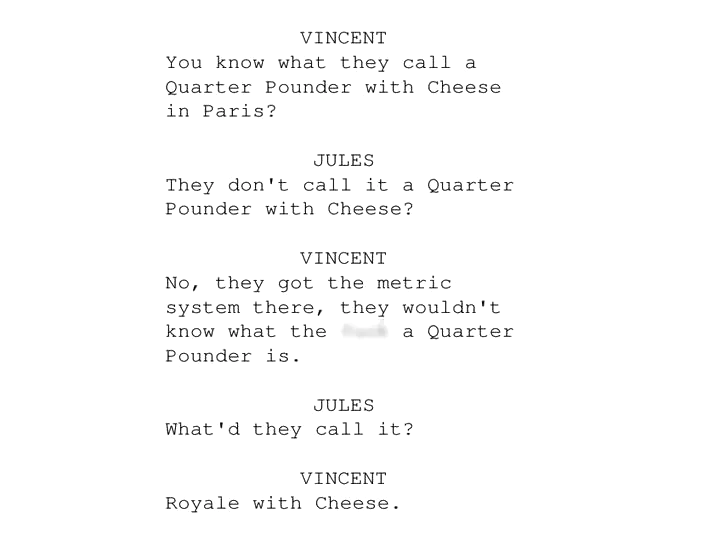

Royale with cheese scene from Pulp Fiction.

Royale with cheese scene from Pulp Fiction.

Note: all code and data for this project can be found in a GitHub repository

Around Christmas, I was home visiting family and sat through an overload of Hallmark Channel holiday movies. To me, Hallmark holiday movies all have the same plot and same characters with different names, so I joked that a well-trained neural net could easily write one and no one would know the difference.1 That joke made me wonder how hard it would be to train an RNN to write movie scripts, so I set out to try.

It turns out scripts for movies on the Hallmark Channel are hard to find, so I decided to use screenplays written by Quentin Tarantino instead. I couldn’t find a usable copy of Grindhouse: Death Proof, but I got vector PDFs for everything else – 12 out of 13 isn’t bad.

I converted the PDFs to text, cleaned up the text using a combination of sed and awk, embedded the characters as one-hot vectors, and fed that into a bidirectional LSTM. Words in all caps have special meaning in screenplays (names of characters, camera directions), so I embedded upper and lower case letters separately.

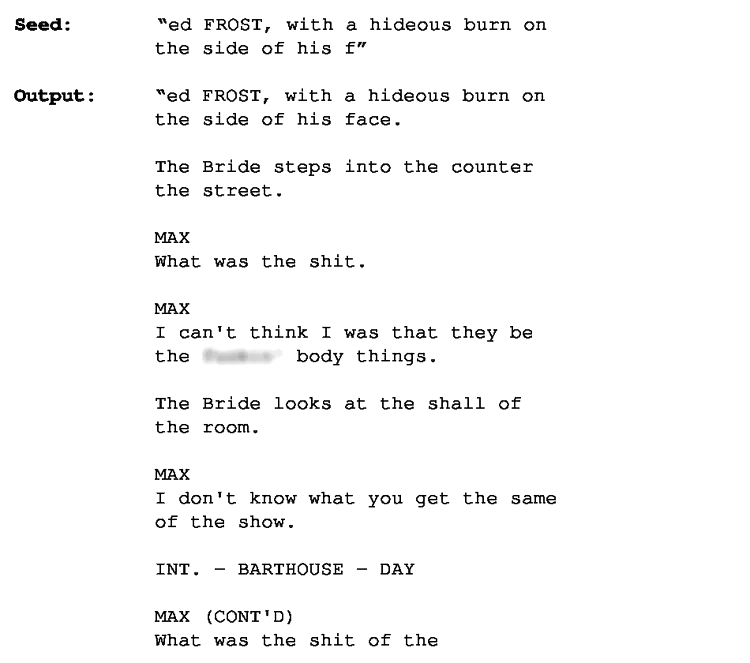

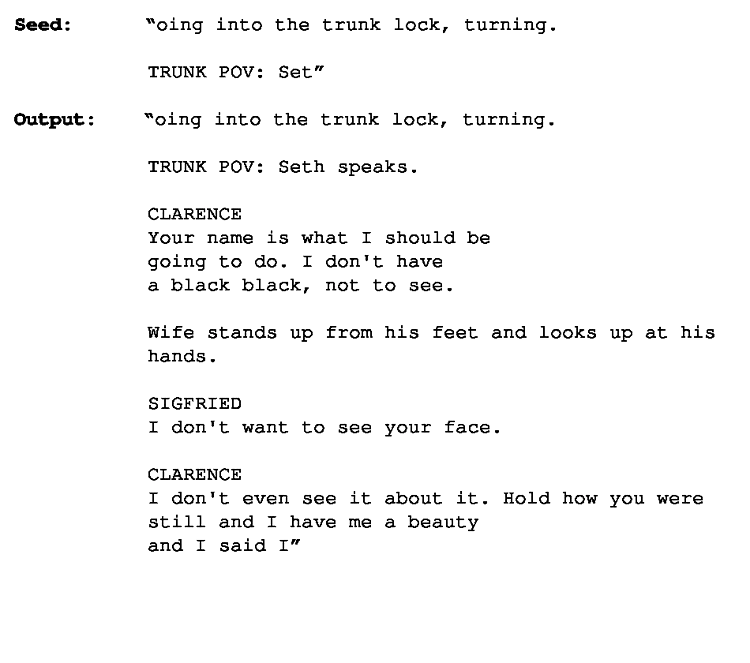

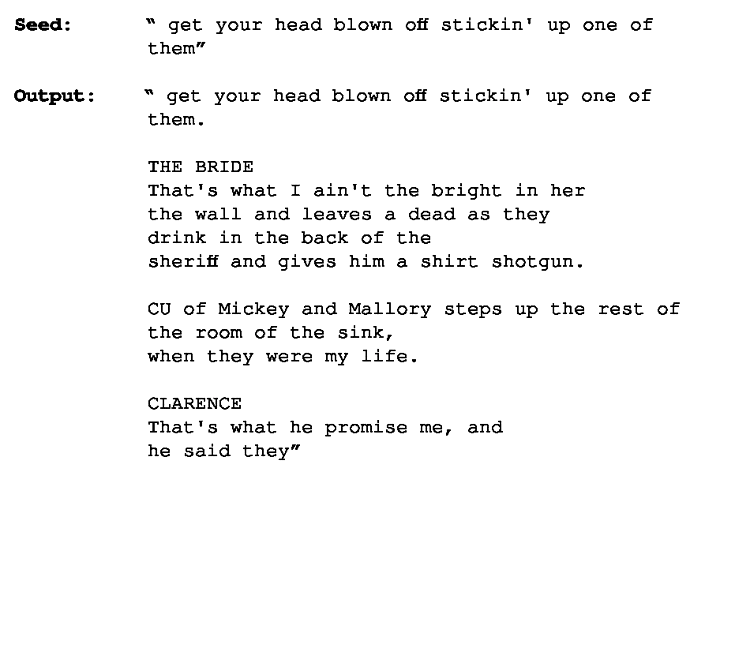

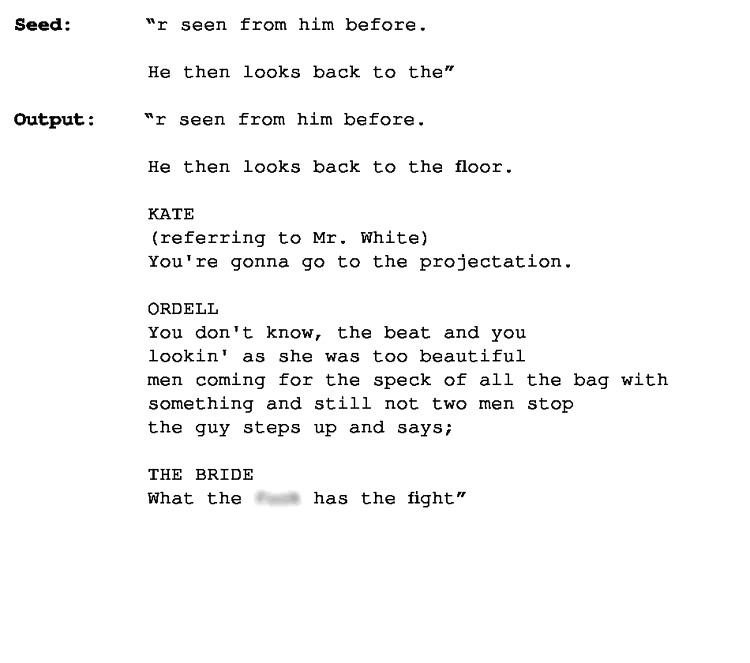

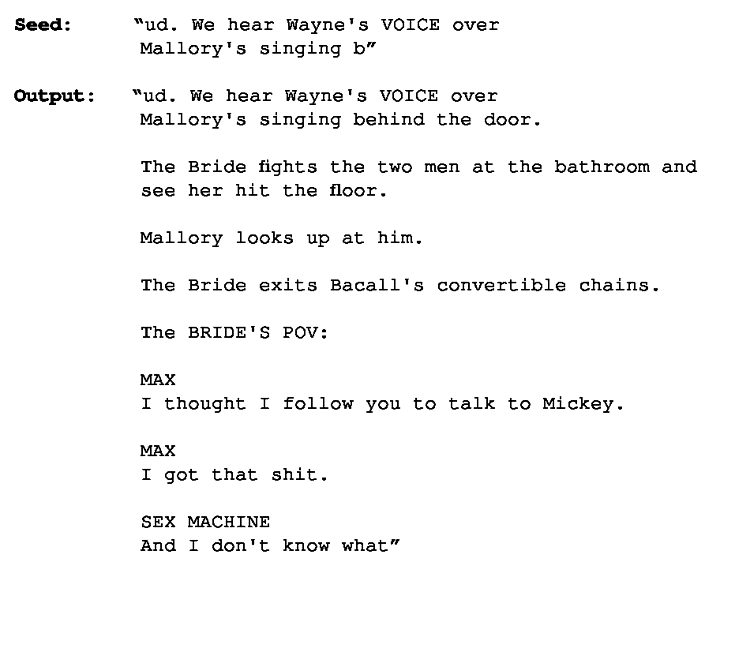

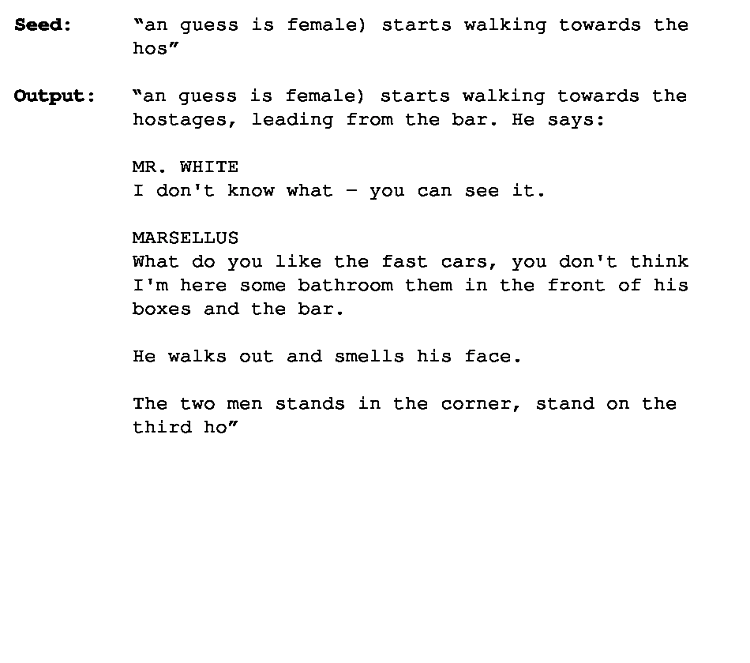

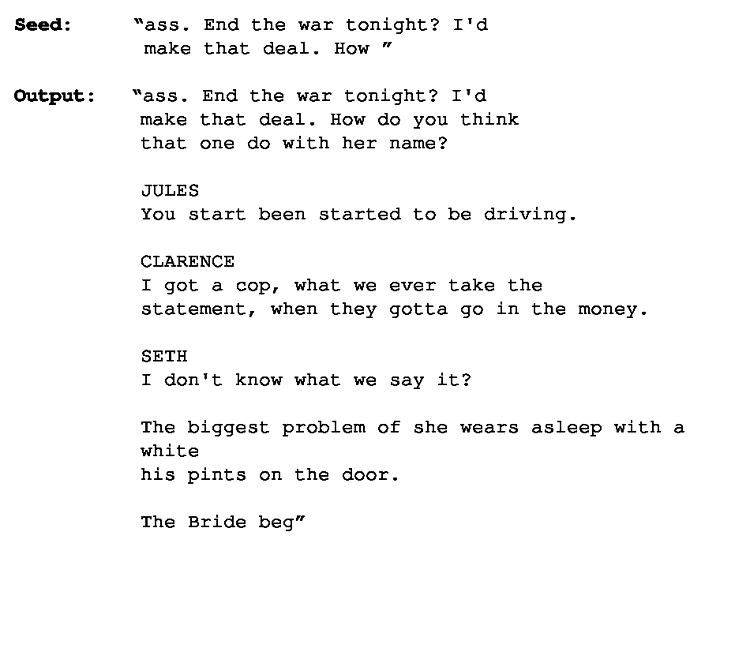

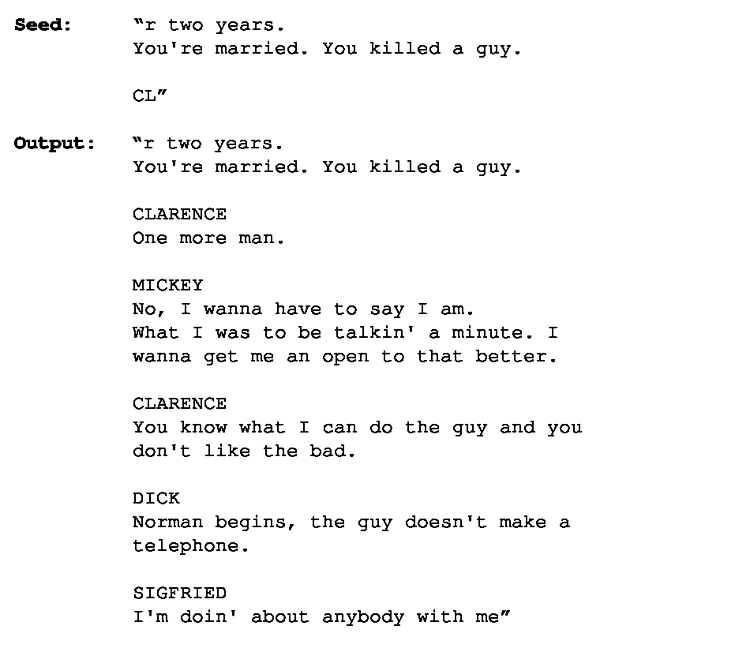

The finished model consists of 2 bidirectional LSTM layers – each with 512 nodes and 20% recurrent dropout – topped off by fully-connected Softmax layer with 82 nodes (there are 82 total characters in the model). The full details and all the code is available in the repo. For now, let’s see some sample output:

A few observations:

- The model is great at picking up on the general structure of a screenplay; characters exchange dialog and occasionally you get camera and scene instructions – “CU of Mickey” (ie, a close up shot of Mickey) and “INT. - BARTHOUSE - DAY” (ie, an interior shot at the “barthouse” during the day).

- Scenes are an amalgam of characters from all of Tarantino’s movies (“THE BRIDE” from Kill Bill, “ORDELL” from Jackie Brown, etc), but unsurprisingly it doesn’t create any new character names.

- It’s also pretty good at word completion (short-term memory). It completes “the burn on the side of his f” with “face” and “walking towards the hos” with “hostages”.

- I really like the “MAX … INT. - BARTHOUSE - DAY … MAX (CONT’D)” sequence, though I think that was coincidence more than anything. With an input sequence of 50 characters, the model could not have known that MAX was talking prior to the scene change.

I didn’t fully tune the model because I felt bad wasting cluster resources on a silly task, but it achieves 60% accuracy on the test set. That’s pretty good considering the messy text and paucity of data (1.7M chars total). It trained relatively quickly as well (9 epochs with early stopping).

Update 2019: I would be curious to see how well one of the “Sesame Street” models – ELMo, ERNIE, BERT, XLNet, RoBERTa, Transfo-XL, GPT-2, etc – would perform on the same task. Thomas Dehaene must have had the same thought regarding Hallmark movies, as he just posted this tutorial on his blog. He couldn’t find any screenplays either and used subtitles instead.