Scraping 5-min weather data from Weather Underground

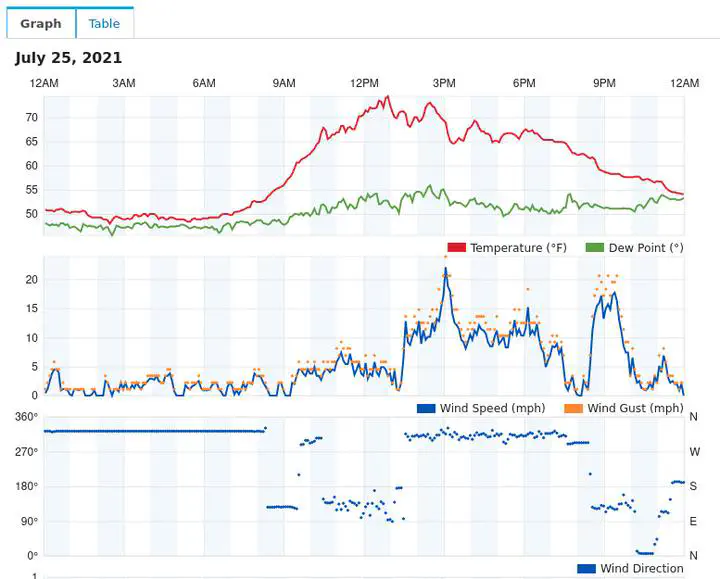

Weather Undergound stores data from over 250,000 personal weather stations across the world. Unfortunately, historical data are not easy to access. It’s possible to view tables of 5-min data from a single day – see this example from a station outside Crested Butte, Colorado – but if you try to scrape the http using something like Python’s requests library, the tables appear blank.

Weather Underground has a security policy that blocks automated requests from viewing data stored in each table. This is where Selenium WebDriver comes in. WebDriver is an toolbox for natively running web browsers, so when you render a page with WebDriver, Weather Underground thinks a regular user is accessing their website and you can access the full source code.

To run the script, the first thing to do is ensure that ChromeDriver is installed. Note that you have to match the ChromeDriver version to whichever version of Chrome is installed on your machine. It’s also possible to use something other than Chrome, for example geckodriver for Firefox or safaridriver for Safari.

Next, update the path to chromedriver in scrape_wunderground.py:

# Set the absolute path to chromedriver

chromedriver_path = '/path/to/chromedriver'

As long as BeautifulSoup and Selenium are installed, the script should work fine after that. However, there are a few important points to note about processing the data once it’s downloaded:

- All data is listed in local time. So summer data is in daylight savings time and winter data is in standard time.

- Depending on the quality of the station,

- All pressure data is reported as sea-level pressure. Depending on the weather station, it may be possible to back-calculate to absolute pressure; some manufacturers (e.g., Ambient Weather WS-2902) use a constant offset whereas others (e.g., Davis Vantage Pro2) perform a more complicated barometric pressure reduction using the station’s 12-hr temperature and humidity history.

The full Python script is available here but is also included below.

"""Module to scrape 5-min personal weather station data from Weather Underground.

Usage is:

>>> python scrape_wunderground.py STATION DATE

where station is a personal weather station (e.g., KCAJAMES3) and date is in the

format YYYY-MM-DD.

Alternatively, each function below can be imported and used in a separate python

script. Note that a working version of chromedriver must be installed and the absolute

path to executable has to be updated below ("chromedriver_path").

Zach Perzan, 2021-07-28"""

import time

import sys

import numpy as np

import pandas as pd

from bs4 import BeautifulSoup as BS

from selenium import webdriver

# Set the absolute path to chromedriver

chromedriver_path = '/path/to/chromedriver'

def render_page(url):

"""Given a url, render it with chromedriver and return the html source

Parameters

----------

url : str

url to render

Returns

-------

r :

rendered page source

"""

driver = webdriver.Chrome(chromedriver_path)

driver.get(url)

time.sleep(3) # Could potentially decrease the sleep time

r = driver.page_source

driver.quit()

return r

def scrape_wunderground(station, date):

"""Given a PWS station ID and date, scrape that day's data from Weather

Underground and return it as a dataframe.

Parameters

----------

station : str

The personal weather station ID

date : str

The date for which to acquire data, formatted as 'YYYY-MM-DD'

Returns

-------

df : dataframe or None

A dataframe of weather observations, with index as pd.DateTimeIndex

and columns as the observed data

"""

# Render the url and open the page source as BS object

url = 'https://www.wunderground.com/dashboard/pws/%s/table/%s/%s/daily' % (station,

date, date)

r = render_page(url)

soup = BS(r, "html.parser",)

container = soup.find('lib-history-table')

# Check that lib-history-table is found

if container is None:

raise ValueError("could not find lib-history-table in html source for %s" % url)

# Get the timestamps and data from two separate 'tbody' tags

all_checks = container.find_all('tbody')

time_check = all_checks[0]

data_check = all_checks[1]

# Iterate through 'tr' tags and get the timestamps

hours = []

for i in time_check.find_all('tr'):

trial = i.get_text()

hours.append(trial)

# For data, locate both value and no-value ("--") classes

classes = ['wu-value wu-value-to', 'wu-unit-no-value ng-star-inserted']

# Iterate through span tags and get data

data = []

for i in data_check.find_all('span', class_=classes):

trial = i.get_text()

data.append(trial)

columns = ['Temperature', 'Dew Point', 'Humidity', 'Wind Speed',

'Wind Gust', 'Pressure', 'Precip. Rate', 'Precip. Accum.']

# Convert NaN values (stings of '--') to np.nan

data_nan = [np.nan if x == '--' else x for x in data]

# Convert list of data to an array

data_array = np.array(data_nan, dtype=float)

data_array = data_array.reshape(-1, len(columns))

# Prepend date to HH:MM strings

timestamps = ['%s %s' % (date, t) for t in hours]

# Convert to dataframe

df = pd.DataFrame(index=timestamps, data=data_array, columns=columns)

df.index = pd.to_datetime(df.index)

return df

def scrape_multiattempt(station, date, attempts=4, wait_time=5.0):

"""Try to scrape data from Weather Underground. If there is an error on the

first attempt, try again.

Parameters

----------

station : str

The personal weather station ID

date : str

The date for which to acquire data, formatted as 'YYYY-MM-DD'

attempts : int, default 4

Maximum number of times to try accessing before failuer

wait_time : float, default 5.0

Amount of time to wait in between attempts

Returns

-------

df : dataframe or None

A dataframe of weather observations, with index as pd.DateTimeIndex

and columns as the observed data

"""

# Try to download data limited number of attempts

for n in range(attempts):

try:

df = scrape_wunderground(station, date)

except:

# if unsuccessful, pause and retry

time.sleep(wait_time)

else:

# if successful, then break

break

# If all attempts failed, return empty df

else:

df = pd.DataFrame()

return df